Why AI Detector Startups Might Never Make It: Three Critical Reasons These Products Are Nearly Worthless

Don't bet against the tide.

Introduction

When ChatGPT launched in November 2022, there was a rush to address concerns about AI-generated content. In response to the initial panic, a wave of AI detection startups emerged. These companies attracted significant investment and institutional interest, particularly from educational institutions. Looking back now, however, I don't think their products will hold long-term value. Their best chance of survival is to pivot. Clouds don't hold their rain forever: they either let it fall or dissipate. Similarly, clinging to a single, short-lived purpose in a space evolving as rapidly as AI is unsustainable. Startups need to recognize when the "rain" of interest and funding starts to wane and redirect their efforts toward a more enduring problem or opportunity.

Three Reasons AI Detector Products Are Worthless

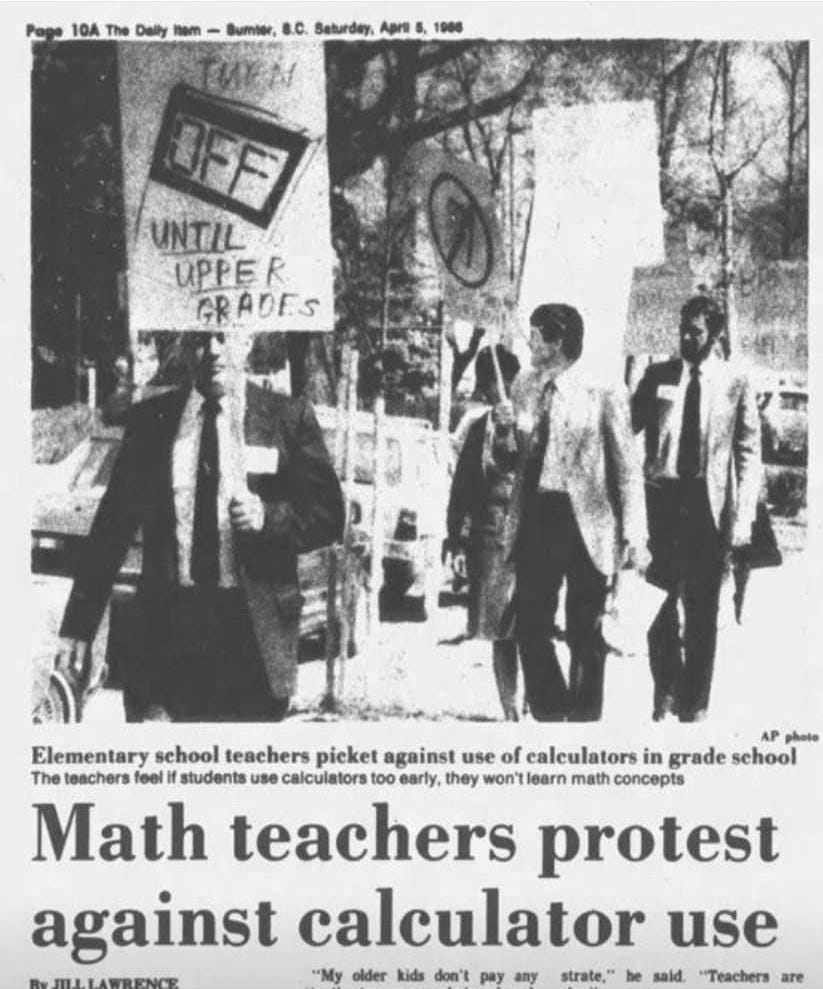

In April 1986, The Washington Post reported that math teachers staged protests against the use of calculators in classrooms. These educators marched with signs and placards, declaring that calculators would destroy the learning of math. They believed it would create a generation of students unable to perform basic arithmetic. The use of calculators was labelled as cheating, laziness, and deception.

Fast forward to 2024, and these moral judgments now sound almost comical. Smartphones come equipped with calculators, and no one questions your integrity for using one. So, what changed? Schools that initially banned calculators evolved. Teachers shifted their focus from pure computation to understanding the underlying principles of mathematics. Today, students are often required to show their work rather than just provide answers. The protests and moral judgments of 1986 seem quaint and old-fashioned in hindsight.

Where am I going with this? The current attacks and moral judgments over AI-generated content mirror society's initial reaction to calculators. This is a reminder of how society often resists technological change before ultimately embracing it.

I believe AI detection tools will eventually fall out of favour. The clamouring over whether content is AI-generated or not will fade as the world adapts and evolves. This pattern of adaptation has repeated itself throughout history with every new technology. Wikipedia, for example, faced widespread rejection before becoming a valuable lesson in source verification. Spell-checkers sparked concerns about declining writing skills before they became standard tools.

How do I know that AI detectors actually work? Yes, they claim to use advanced AI algorithms to analyse whether content is AI-generated or not. But how can I be sure that their claims are valid? Numerous articles and social media posts show that AI detectors often fail.

For example, a Bloomberg article published in October 2024 reported that AI detectors falsely accuse students of cheating, even when they have done nothing wrong. Some weeks ago, I saw a LinkedIn post where an AI detector hilariously flagged the U.S. Declaration of Independence as 97% AI-generated. For anyone unaware, that document was written in 1776, nearly two and a half centuries before the launch of ChatGPT, or AI in general.

If AI detectors can fail so blatantly, how are we supposed to trust their verifications? Perhaps they just want us to trust them because they’ve raised millions of dollars in funding. You know... trust me, bro. Even OpenAI, the creator of ChatGPT, admits that they cannot reliably detect AI-generated text.

This raises an obvious question: If OpenAI themselves acknowledge the limitations of detecting AI content, how can third-party detectors claim to have superior capabilities?

AI detector startups are betting against technological progress and societal adaptation, which is a losing bet. AI tools like Claude and ChatGPT are already great at writing good-enough articles, and most organizations and individuals need nothing more than good-enough writing. They aren't hoping to win a Nobel Prize; they just need a way to communicate quickly and effectively. Meanwhile, AI detectors are not selling their tools to those end users. They sell to institutions, relying more on fear and compliance than on demonstrable value. This is why both social media and the research community have shown AI detector tools to be worthless and biased.

Conclusion

Do you know of any company that detects calculator use in mathematics? None exists. The current rush to invest in AI-generated content detection tools may prove to be backing the wrong side of history. These companies are competing against the tide. As I mentioned earlier, society adapted to calculators, moving past the initial panic to embrace them productively.

Educational institutions will likely follow this well-worn path with AI: from the current panic and prohibition attempts, through gradual acceptance, to eventually reinventing their teaching methods. The future of education won't revolve around catching AI use but around teaching students how to use AI effectively while developing critical thinking, information synthesis, and original analysis skills. Just as mathematics evolved from testing computation to testing understanding, writing will shift from "Did you write this yourself?" to "Can you defend your arguments and demonstrate your thinking process?"

The real opportunity lies not in detecting AI use, but in helping institutions and students navigate this transition, focusing on higher-order skills that remain uniquely human. The question isn't whether AI detection companies can perfect their technology; it's whether there will be a meaningful market for their services once society adapts to this new reality. If history is any guide, they're building for a future that's already passing them by. They are solving yesterday's problem while missing tomorrow's opportunity. To paraphrase Thanos, AI is inevitable.
